Merge dreambooth and finetuning in one repo to align with kohya_ss new repo (#10)

* Merge both dreambooth and finetune back in one repo


parent b78df38979

commit 706dfe157f

@@ -1,21 +0,0 @@

{
  "architectures": [
    "BertModel"
  ],
  "attention_probs_dropout_prob": 0.1,
  "hidden_act": "gelu",
  "hidden_dropout_prob": 0.1,
  "hidden_size": 768,
  "initializer_range": 0.02,
  "intermediate_size": 3072,
  "layer_norm_eps": 1e-12,
  "max_position_embeddings": 512,
  "model_type": "bert",
  "num_attention_heads": 12,
  "num_hidden_layers": 12,
  "pad_token_id": 0,
  "type_vocab_size": 2,
  "vocab_size": 30524,
  "encoder_width": 768,
  "add_cross_attention": true
}

195

README.md


@@ -1,194 +1,13 @@

|

||||

# HOWTO

|

||||

# Kohya's dreambooth and finetuning

|

||||

|

||||

This repo provides all the required config to run the Dreambooth version found in this note: https://note.com/kohya_ss/n/nee3ed1649fb6

|

||||

The setup of bitsandbytes with Adam8bit support for windows: https://note.com/kohya_ss/n/n47f654dc161e

|

||||

This repo now combines both Kohya_ss solutions under one roof. I am merging them into a single repo to align with the new official kohya repo, where he will maintain his code from now on: https://github.com/kohya-ss/sd-scripts

|

||||

|

||||

## Required Dependencies

|

||||

A new note accompanying the release of his new repo can be found here: https://note.com/kohya_ss/n/nba4eceaa4594

|

||||

|

||||

Python 3.10.6 and Git:

|

||||

## Dreambooth

|

||||

|

||||

- Python 3.10.6: https://www.python.org/ftp/python/3.10.6/python-3.10.6-amd64.exe

|

||||

- git: https://git-scm.com/download/win

|

||||

You can find the Dreambooth-specific documentation in the [Dreambooth README](README_dreambooth.md)

|

||||

|

||||

Give unrestricted script access to powershell so venv can work:

|

||||

## Finetune

|

||||

|

||||

- Open an administrator powershell window

|

||||

- Type `Set-ExecutionPolicy Unrestricted` and answer A

|

||||

- Close admin powershell window

|

||||

|

||||

## Installation

|

||||

|

||||

Open a regular Powershell terminal and type the following inside:

|

||||

|

||||

```powershell

|

||||

git clone https://github.com/bmaltais/kohya_ss.git

|

||||

cd kohya_ss

|

||||

|

||||

python -m venv --system-site-packages venv

|

||||

.\venv\Scripts\activate

|

||||

|

||||

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

|

||||

pip install --upgrade -r requirements.txt

|

||||

pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

|

||||

|

||||

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\

|

||||

cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py

|

||||

cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

|

||||

|

||||

accelerate config

|

||||

|

||||

```

|

||||

|

||||

Answers to accelerate config:

|

||||

|

||||

```txt

|

||||

- 0

|

||||

- 0

|

||||

- NO

|

||||

- NO

|

||||

- All

|

||||

- fp16

|

||||

```

|

||||

|

||||

### Optional: CUDNN 8.6

|

||||

|

||||

This step is optional but can improve the learning speed for NVidia 4090 owners...

|

||||

|

||||

Due to the file size, I can't host the DLLs needed for CUDNN 8.6 on GitHub. I strongly advise downloading them for a speed boost in sample generation (almost 50% on a 4090). You can download them from here: https://b1.thefileditch.ch/mwxKTEtelILoIbMbruuM.zip

|

||||

|

||||

To install simply unzip the directory and place the cudnn_windows folder in the root of the kohya_diffusers_fine_tuning repo.

|

||||

|

||||

Run the following command to install:

|

||||

|

||||

```

|

||||

python cudann_1.8_install.py

|

||||

```

|

||||

|

||||

## Upgrade

|

||||

|

||||

When a new release comes out you can upgrade your repo with the following command:

|

||||

|

||||

```powershell

|

||||

cd kohya_ss

|

||||

git pull

|

||||

.\venv\Scripts\activate

|

||||

pip install --upgrade -r requirements.txt

|

||||

```

|

||||

|

||||

Once the commands have completed successfully you should be ready to use the new version.

|

||||

|

||||

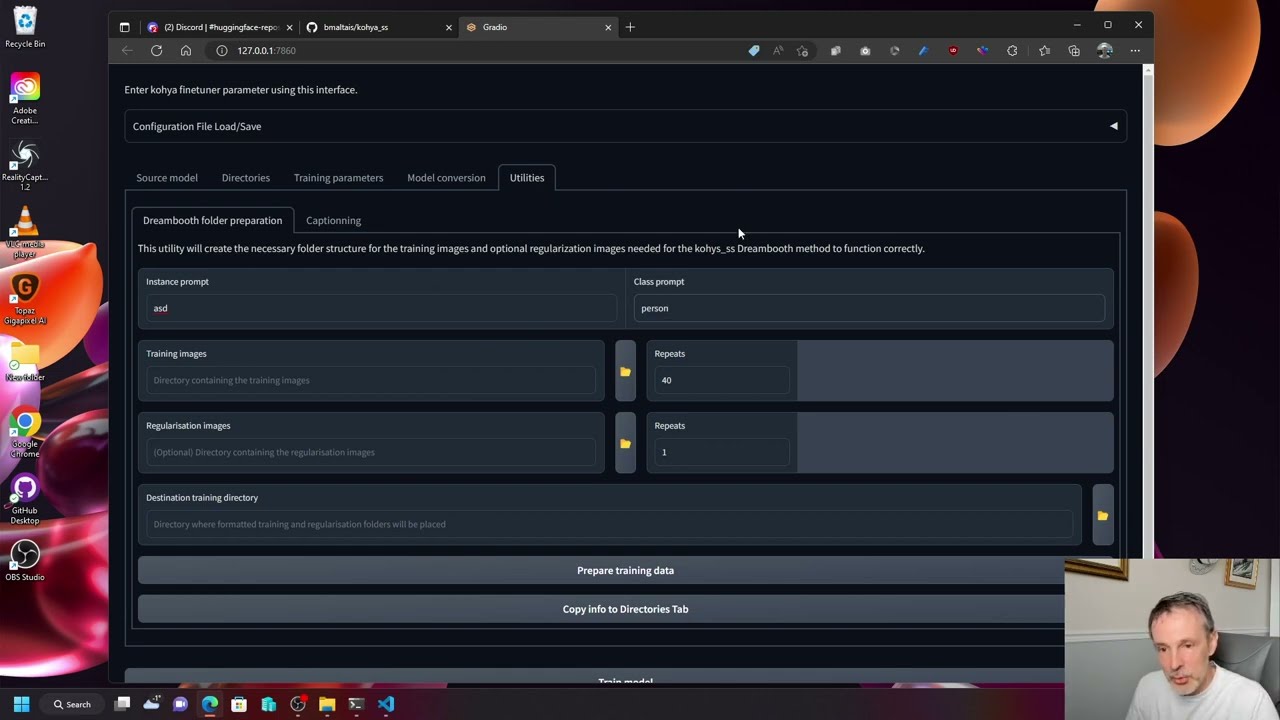

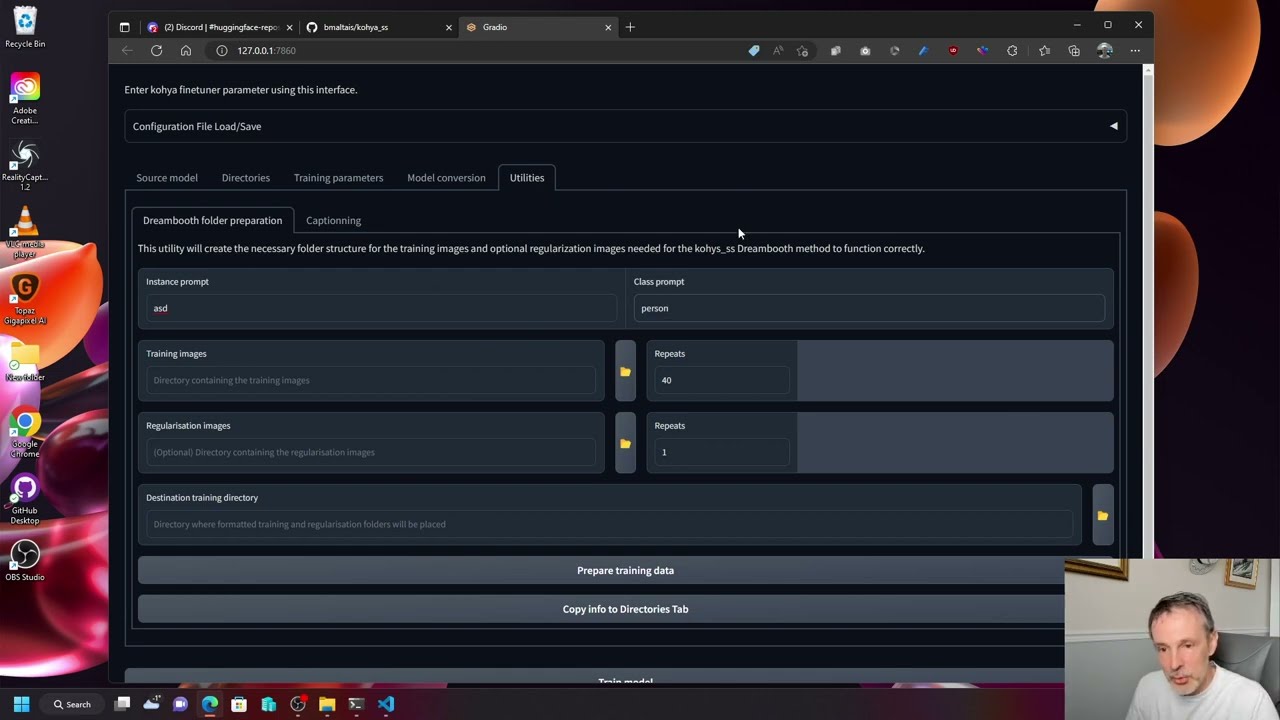

## GUI

|

||||

|

||||

There is now support for GUI based training using gradio. You can start the GUI interface by running:

|

||||

|

||||

```powershell

|

||||

python .\dreambooth_gui.py

|

||||

```

|

||||

|

||||

## Quickstart screencast

|

||||

|

||||

You can find a screen cast on how to use the GUI at the following location:

|

||||

|

||||

https://www.youtube.com/watch?v=RlvqEKj03WI

|

||||

|

||||

## Folders configuration

|

||||

|

||||

Refer to the note to understand how to create the folder structure. In short, it should look like:

|

||||

|

||||

```
<arbitrary folder name>
|- <arbitrary class folder name>
    |- <repeat count>_<class>
|- <arbitrary training folder name>
    |- <repeat count>_<token> <class>
```

|

||||

|

||||

Example for `asd dog` where `asd` is the token word and `dog` is the class. In this example the regularization `dog` class images contained in the folder will be repeated only 1 time and the `asd dog` images will be repeated 20 times:

|

||||

|

||||

```
my_asd_dog_dreambooth
|- reg_dog
    |- 1_dog
       `- reg_image_1.png
       `- reg_image_2.png
       ...
       `- reg_image_256.png
|- train_dog
    |- 20_asd dog
       `- dog1.png
       ...
       `- dog8.png
```

|

||||

|

||||

## Support

|

||||

|

||||

Drop by the discord server for support: https://discord.com/channels/1041518562487058594/1041518563242020906

|

||||

|

||||

## Contributors

|

||||

|

||||

- Lord of the universe - cacoe (twitter: @cac0e)

|

||||

|

||||

## Change history

|

||||

|

||||

* 12/19 (v18.4) update:

|

||||

- Add support for shuffle_caption, save_state, resume, prior_loss_weight under "Advanced Configuration" section

|

||||

- Fix issue with open/save config not working properly

|

||||

* 12/19 (v18.3) update:

|

||||

- fix stop encoder training issue

|

||||

* 12/19 (v18.2) update:

|

||||

- Fix file/folder opening behind the browser window

|

||||

- Add WD14 and BLIP captioning to utilities

|

||||

- Improve overall GUI layout

|

||||

* 12/18 (v18.1) update:

|

||||

- Add Stable Diffusion model conversion utility. Make sure to run `pip install -U -r requirements.txt` after updating to this release, as it introduces new pip requirements.

|

||||

* 12/17 (v18) update:

|

||||

- Save model as option added to train_db_fixed.py

|

||||

- Save model as option added to GUI

|

||||

- Retire the "Model conversion" parameters, which essentially performed the same function as the new `--save_model_as` parameter

|

||||

* 12/17 (v17.2) update:

|

||||

- Adding new dataset balancing utility.

|

||||

* 12/17 (v17.1) update:

|

||||

- Adding GUI for kohya_ss called dreambooth_gui.py

|

||||

- Removed support for `--finetuning`, as there is now a dedicated Python repo for that. `--fine-tuning` is still there behind the scenes until kohya_ss removes it in a future code release.

|

||||

- removing cli examples as I will now focus on the GUI for training. People who prefer cli based training can still do that.

|

||||

* 12/13 (v17) update:

|

||||

- Added support for learning to fp16 gradient (experimental function). SD1.x can be trained with 8GB of VRAM. Specify full_fp16 options.

|

||||

* 12/06 (v16) update:

|

||||

- Added support for Diffusers 0.10.2 (use code in Diffusers to learn v-parameterization).

|

||||

- Diffusers also supports safetensors.

|

||||

- Added support for accelerate 0.15.0.

|

||||

* 12/05 (v15) update:

|

||||

- The script has been divided into two parts

|

||||

- Support for SafeTensors format has been added. Install SafeTensors with `pip install safetensors`. The script will automatically detect the format based on the file extension when loading. Use the `--use_safetensors` option if you want to save the model as safetensor.

|

||||

- The vae option has been added to load a VAE model separately.

|

||||

- The log_prefix option has been added to allow adding a custom string to the log directory name before the date and time.

|

||||

* 11/30 (v13) update:

|

||||

- fix training text encoder at specified step (`--stop_text_encoder_training=<step #>`) that was causing both Unet and text encoder training to stop completely at the specified step rather than continue without text encoding training.

|

||||

* 11/29 (v12) update:

|

||||

- stop training text encoder at specified step (`--stop_text_encoder_training=<step #>`)

|

||||

- tqdm smoothing

|

||||

- updated fine tuning script to support SD2.0 768/v

|

||||

* 11/27 (v11) update:

|

||||

- Diffusers 0.9.0 is required. Update with `pip install --upgrade -r requirements.txt` in the virtual environment.

|

||||

- The way captions are handled in DreamBooth has changed. When a caption file existed, the file's caption was added to the folder caption until v10, but from v11 it is only the file's caption. Please be careful.

|

||||

- Fixed a bug where prior_loss_weight was applied to learning images. Sorry for the inconvenience.

|

||||

- Compatible with Stable Diffusion v2.0. Add the `--v2` option. If you are using `768-v-ema.ckpt` or `stable-diffusion-2` instead of `stable-diffusion-v2-base`, add `--v_parameterization` as well. Learn more about other options.

|

||||

- Added options related to the learning rate scheduler.

|

||||

- You can download and use Diffusers models directly from Hugging Face. In addition, Diffusers models can be saved during training.

|

||||

* 11/21 (v10):

|

||||

- Added minimum/maximum resolution specification when using Aspect Ratio Bucketing (min_bucket_reso/max_bucket_reso option).

|

||||

- Added extension specification for caption files (caption_extention).

|

||||

- Added support for images with .webp extension.

|

||||

- Added the ability to add captions to both training images and regularization images.

|

||||

* 11/18 (v9):

|

||||

- Added support for Aspect Ratio Bucketing (enable_bucket option). (--enable_bucket)

|

||||

- Added support for selecting data format (fp16/bf16/float) when saving checkpoint (--save_precision)

|

||||

- Added support for saving learning state (--save_state, --resume)

|

||||

- Added support for logging (--logging_dir)

|

||||

* 11/14 (diffusers_fine_tuning v2):

|

||||

- script name is now fine_tune.py.

|

||||

- Added option to learn Text Encoder --train_text_encoder.

|

||||

- The data format of checkpoint at the time of saving can be specified with the --save_precision option. You can choose float, fp16, and bf16.

|

||||

- Added a --save_state option to save the learning state (optimizer, etc.) in the middle. It can be resumed with the --resume option.

|

||||

* 11/9 (v8): supports Diffusers 0.7.2. To upgrade diffusers run `pip install --upgrade diffusers[torch]`

|

||||

* 11/7 (v7): Text Encoder supports checkpoint files in different storage formats (it is converted at the time of import, so export will be in normal format). Changed the average value of EPOCH loss to output to the screen. Added a function to save epoch and global step in checkpoint in SD format (add values if there is existing data). The reg_data_dir option is enabled during fine tuning (fine tuning while mixing regularized images). Added dataset_repeats option that is valid for fine tuning (specified when the number of teacher images is small and the epoch is extremely short).

|

||||

You can find the finetune-specific documentation in the [Finetune README](README_finetune.md)

|

||||

203

README_dreambooth.md

Normal file


@@ -0,0 +1,203 @@

|

||||

# Kohya_ss Dreambooth

|

||||

|

||||

This repo provides all the required code to run the Dreambooth version found in this note: https://note.com/kohya_ss/n/nee3ed1649fb6

|

||||

|

||||

## Required Dependencies

|

||||

|

||||

Python 3.10.6 and Git:

|

||||

|

||||

- Python 3.10.6: https://www.python.org/ftp/python/3.10.6/python-3.10.6-amd64.exe

|

||||

- git: https://git-scm.com/download/win

|

||||

|

||||

Give unrestricted script access to powershell so venv can work:

|

||||

|

||||

- Open an administrator powershell window

|

||||

- Type `Set-ExecutionPolicy Unrestricted` and answer A

|

||||

- Close admin powershell window

|

||||

|

||||

## Installation

|

||||

|

||||

Open a regular Powershell terminal and type the following inside:

|

||||

|

||||

```powershell

|

||||

git clone https://github.com/bmaltais/kohya_ss.git

|

||||

cd kohya_ss

|

||||

|

||||

python -m venv --system-site-packages venv

|

||||

.\venv\Scripts\activate

|

||||

|

||||

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

|

||||

pip install --upgrade -r requirements.txt

|

||||

pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

|

||||

|

||||

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\

|

||||

cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py

|

||||

cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

|

||||

|

||||

accelerate config

|

||||

|

||||

```

|

||||

|

||||

Answers to accelerate config:

|

||||

|

||||

```txt

|

||||

- 0

|

||||

- 0

|

||||

- NO

|

||||

- NO

|

||||

- All

|

||||

- fp16

|

||||

```

|

||||

|

||||

### Optional: CUDNN 8.6

|

||||

|

||||

This step is optional but can improve the learning speed for NVidia 4090 owners...

|

||||

|

||||

Due to the file size, I can't host the DLLs needed for CUDNN 8.6 on GitHub. I strongly advise downloading them for a speed boost in sample generation (almost 50% on a 4090). You can download them from here: https://b1.thefileditch.ch/mwxKTEtelILoIbMbruuM.zip

|

||||

|

||||

To install simply unzip the directory and place the cudnn_windows folder in the root of the kohya_diffusers_fine_tuning repo.

|

||||

|

||||

Run the following command to install:

|

||||

|

||||

```

|

||||

python .\tools\cudann_1.8_install.py

|

||||

```

|

||||

|

||||

## Upgrade

|

||||

|

||||

When a new release comes out you can upgrade your repo with the following command:

|

||||

|

||||

```

|

||||

.\upgrade.bat

|

||||

```

|

||||

|

||||

or you can do it manually with

|

||||

|

||||

```powershell

|

||||

cd kohya_ss

|

||||

git pull

|

||||

.\venv\Scripts\activate

|

||||

pip install --upgrade -r requirements.txt

|

||||

```

|

||||

|

||||

Once the commands have completed successfully you should be ready to use the new version.

|

||||

|

||||

## GUI

|

||||

|

||||

There is now support for GUI based training using gradio. You can start the GUI interface by running:

|

||||

|

||||

```powershell

|

||||

.\dreambooth.bat

|

||||

```

|

||||

|

||||

## CLI

|

||||

|

||||

You can find various examples of how to leverage the fine_tune.py in this folder: https://github.com/bmaltais/kohya_ss/tree/master/examples

|

||||

|

||||

## Quickstart screencast

|

||||

|

||||

You can find a screen cast on how to use the GUI at the following location:

|

||||

|

||||

https://www.youtube.com/watch?v=RlvqEKj03WI

|

||||

|

||||

## Folders configuration

|

||||

|

||||

Refer to the note to understand how to create the folder structure. In short, it should look like:

|

||||

|

||||

```
<arbitrary folder name>
|- <arbitrary class folder name>
    |- <repeat count>_<class>
|- <arbitrary training folder name>
    |- <repeat count>_<token> <class>
```

|

||||

|

||||

Example for `asd dog` where `asd` is the token word and `dog` is the class. In this example the regularization `dog` class images contained in the folder will be repeated only 1 time and the `asd dog` images will be repeated 20 times:

|

||||

|

||||

```
my_asd_dog_dreambooth
|- reg_dog
    |- 1_dog
       `- reg_image_1.png
       `- reg_image_2.png
       ...
       `- reg_image_256.png
|- train_dog
    |- 20_asd dog
       `- dog1.png
       ...
       `- dog8.png
```
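As a rough illustration of the naming convention above, the sketch below splits a folder name such as `20_asd dog` into its repeat count and the text that follows the underscore. The helper name `parse_concept_folder`, the `ConceptFolder` type, and the regular expression are assumptions made for this example; they are inferred from the folder layout and are not taken from the training script.

```python
import re
from typing import NamedTuple


class ConceptFolder(NamedTuple):
    repeats: int  # number before the underscore, e.g. 20
    caption: str  # text after the underscore, e.g. "asd dog" or "dog"


# Hypothetical pattern inferred from "<repeat count>_<token> <class>";
# the real script may parse folder names differently.
_FOLDER_RE = re.compile(r"^(\d+)_(.+)$")


def parse_concept_folder(name: str) -> ConceptFolder:
    """Split '<repeat count>_<rest>' into the repeat count and the rest."""
    match = _FOLDER_RE.match(name)
    if match is None:
        raise ValueError(f"folder name {name!r} does not start with '<repeat count>_'")
    return ConceptFolder(repeats=int(match.group(1)), caption=match.group(2))


if __name__ == "__main__":
    print(parse_concept_folder("20_asd dog"))
    print(parse_concept_folder("1_dog"))
```

A check like this can catch a misnamed folder (for example `reg_dog` placed where `1_dog` was expected) before a training run is started.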

|

||||

|

||||

## Support

|

||||

|

||||

Drop by the discord server for support: https://discord.com/channels/1041518562487058594/1041518563242020906

|

||||

|

||||

## Contributors

|

||||

|

||||

- Lord of the universe - cacoe (twitter: @cac0e)

|

||||

|

||||

## Change history

|

||||

|

||||

* 12/19 (v18.4) update:

|

||||

- Add support for shuffle_caption, save_state, resume, prior_loss_weight under "Advanced Configuration" section

|

||||

- Fix issue with open/save config not working properly

|

||||

* 12/19 (v18.3) update:

|

||||

- fix stop encoder training issue

|

||||

* 12/19 (v18.2) update:

|

||||

- Fix file/folder opening behind the browser window

|

||||

- Add WD14 and BLIP captioning to utilities

|

||||

- Improve overall GUI layout

|

||||

* 12/18 (v18.1) update:

|

||||

- Add Stable Diffusion model conversion utility. Make sure to run `pip install -U -r requirements.txt` after updating to this release, as it introduces new pip requirements.

|

||||

* 12/17 (v18) update:

|

||||

- Save model as option added to train_db_fixed.py

|

||||

- Save model as option added to GUI

|

||||

- Retire the "Model conversion" parameters, which essentially performed the same function as the new `--save_model_as` parameter

|

||||

* 12/17 (v17.2) update:

|

||||

- Adding new dataset balancing utility.

|

||||

* 12/17 (v17.1) update:

|

||||

- Adding GUI for kohya_ss called dreambooth_gui.py

|

||||

- Removed support for `--finetuning`, as there is now a dedicated Python repo for that. `--fine-tuning` is still there behind the scenes until kohya_ss removes it in a future code release.

|

||||

- removing cli examples as I will now focus on the GUI for training. People who prefer cli based training can still do that.

|

||||

* 12/13 (v17) update:

|

||||

- Added support for learning to fp16 gradient (experimental function). SD1.x can be trained with 8GB of VRAM. Specify full_fp16 options.

|

||||

* 12/06 (v16) update:

|

||||

- Added support for Diffusers 0.10.2 (use code in Diffusers to learn v-parameterization).

|

||||

- Diffusers also supports safetensors.

|

||||

- Added support for accelerate 0.15.0.

|

||||

* 12/05 (v15) update:

|

||||

- The script has been divided into two parts

|

||||

- Support for SafeTensors format has been added. Install SafeTensors with `pip install safetensors`. The script will automatically detect the format based on the file extension when loading. Use the `--use_safetensors` option if you want to save the model as safetensor.

|

||||

- The vae option has been added to load a VAE model separately.

|

||||

- The log_prefix option has been added to allow adding a custom string to the log directory name before the date and time.

|

||||

* 11/30 (v13) update:

|

||||

- fix training text encoder at specified step (`--stop_text_encoder_training=<step #>`) that was causing both Unet and text encoder training to stop completely at the specified step rather than continue without text encoding training.

|

||||

* 11/29 (v12) update:

|

||||

- stop training text encoder at specified step (`--stop_text_encoder_training=<step #>`)

|

||||

- tqdm smoothing

|

||||

- updated fine tuning script to support SD2.0 768/v

|

||||

* 11/27 (v11) update:

|

||||

- Diffusers 0.9.0 is required. Update with `pip install --upgrade -r requirements.txt` in the virtual environment.

|

||||

- The way captions are handled in DreamBooth has changed. When a caption file existed, the file's caption was added to the folder caption until v10, but from v11 it is only the file's caption. Please be careful.

|

||||

- Fixed a bug where prior_loss_weight was applied to learning images. Sorry for the inconvenience.

|

||||

- Compatible with Stable Diffusion v2.0. Add the `--v2` option. If you are using `768-v-ema.ckpt` or `stable-diffusion-2` instead of `stable-diffusion-v2-base`, add `--v_parameterization` as well. Learn more about other options.

|

||||

- Added options related to the learning rate scheduler.

|

||||

- You can download and use Diffusers models directly from Hugging Face. In addition, Diffusers models can be saved during training.

|

||||

* 11/21 (v10):

|

||||

- Added minimum/maximum resolution specification when using Aspect Ratio Bucketing (min_bucket_reso/max_bucket_reso option).

|

||||

- Added extension specification for caption files (caption_extention).

|

||||

- Added support for images with .webp extension.

|

||||

- Added the ability to add captions to both training images and regularization images.

|

||||

* 11/18 (v9):

|

||||

- Added support for Aspect Ratio Bucketing (enable_bucket option). (--enable_bucket)

|

||||

- Added support for selecting data format (fp16/bf16/float) when saving checkpoint (--save_precision)

|

||||

- Added support for saving learning state (--save_state, --resume)

|

||||

- Added support for logging (--logging_dir)

|

||||

* 11/14 (diffusers_fine_tuning v2):

|

||||

- script name is now fine_tune.py.

|

||||

- Added option to learn Text Encoder --train_text_encoder.

|

||||

- The data format of checkpoint at the time of saving can be specified with the --save_precision option. You can choose float, fp16, and bf16.

|

||||

- Added a --save_state option to save the learning state (optimizer, etc.) in the middle. It can be resumed with the --resume option.

|

||||

* 11/9 (v8): supports Diffusers 0.7.2. To upgrade diffusers run `pip install --upgrade diffusers[torch]`

|

||||

* 11/7 (v7): Text Encoder supports checkpoint files in different storage formats (it is converted at the time of import, so export will be in normal format). Changed the average value of EPOCH loss to output to the screen. Added a function to save epoch and global step in checkpoint in SD format (add values if there is existing data). The reg_data_dir option is enabled during fine tuning (fine tuning while mixing regularized images). Added dataset_repeats option that is valid for fine tuning (specified when the number of teacher images is small and the epoch is extremely short).

|

||||

167

README_finetune.md

Normal file


@@ -0,0 +1,167 @@

|

||||

# Kohya_ss Finetune

|

||||

|

||||

This Python utility provides code to run the Diffusers fine-tuning version found in this note: https://note.com/kohya_ss/n/nbf7ce8d80f29

|

||||

|

||||

## Required Dependencies

|

||||

|

||||

Python 3.10.6 and Git:

|

||||

|

||||

- Python 3.10.6: https://www.python.org/ftp/python/3.10.6/python-3.10.6-amd64.exe

|

||||

- git: https://git-scm.com/download/win

|

||||

|

||||

Give unrestricted script access to powershell so venv can work:

|

||||

|

||||

- Open an administrator powershell window

|

||||

- Type `Set-ExecutionPolicy Unrestricted` and answer A

|

||||

- Close admin powershell window

|

||||

|

||||

## Installation

|

||||

|

||||

Open a regular Powershell terminal and type the following inside:

|

||||

|

||||

```powershell

|

||||

git clone https://github.com/bmaltais/kohya_diffusers_fine_tuning.git

|

||||

cd kohya_diffusers_fine_tuning

|

||||

|

||||

python -m venv --system-site-packages venv

|

||||

.\venv\Scripts\activate

|

||||

|

||||

pip install torch==1.12.1+cu116 torchvision==0.13.1+cu116 --extra-index-url https://download.pytorch.org/whl/cu116

|

||||

pip install --upgrade -r requirements.txt

|

||||

pip install -U -I --no-deps https://github.com/C43H66N12O12S2/stable-diffusion-webui/releases/download/f/xformers-0.0.14.dev0-cp310-cp310-win_amd64.whl

|

||||

|

||||

cp .\bitsandbytes_windows\*.dll .\venv\Lib\site-packages\bitsandbytes\

|

||||

cp .\bitsandbytes_windows\cextension.py .\venv\Lib\site-packages\bitsandbytes\cextension.py

|

||||

cp .\bitsandbytes_windows\main.py .\venv\Lib\site-packages\bitsandbytes\cuda_setup\main.py

|

||||

|

||||

accelerate config

|

||||

|

||||

```

|

||||

|

||||

Answers to accelerate config:

|

||||

|

||||

```txt

|

||||

- 0

|

||||

- 0

|

||||

- NO

|

||||

- NO

|

||||

- All

|

||||

- fp16

|

||||

```

|

||||

|

||||

### Optional: CUDNN 8.6

|

||||

|

||||

This step is optional but can improve the learning speed for NVidia 4090 owners...

|

||||

|

||||

Due to the file size, I can't host the DLLs needed for CUDNN 8.6 on GitHub. I strongly advise downloading them for a speed boost in sample generation (almost 50% on a 4090). You can download them from here: https://b1.thefileditch.ch/mwxKTEtelILoIbMbruuM.zip

|

||||

|

||||

To install simply unzip the directory and place the cudnn_windows folder in the root of the kohya_diffusers_fine_tuning repo.

|

||||

|

||||

Run the following command to install:

|

||||

|

||||

```

|

||||

python .\tools\cudann_1.8_install.py

|

||||

```

|

||||

|

||||

## Upgrade

|

||||

|

||||

When a new release comes out you can upgrade your repo with the following command:

|

||||

|

||||

```

|

||||

.\upgrade.bat

|

||||

```

|

||||

|

||||

or you can do it manually with

|

||||

|

||||

```powershell

|

||||

cd kohya_ss

|

||||

git pull

|

||||

.\venv\Scripts\activate

|

||||

pip install --upgrade -r requirements.txt

|

||||

```

|

||||

|

||||

Once the commands have completed successfully you should be ready to use the new version.

|

||||

|

||||

## Folders configuration

|

||||

|

||||

Simply put all the images you will want to train on in a single directory. It does not matter what size or aspect ratio they have. It is your choice.

|

||||

|

||||

## Captions

|

||||

|

||||

Each image file needs to be accompanied by a caption file describing what the image is about. For example, if you want to train on cute dog pictures, you can put `cute dog` as the caption in every file. You can use the `tools\caption.ps1` sample code to help with that:

|

||||

|

||||

```powershell
$folder = "sample"
$file_pattern="*.*"
$caption_text="cute dog"

$files = Get-ChildItem "$folder\$file_pattern" -Include *.png, *.jpg, *.webp -File
foreach ($file in $files) {
    if (-not(Test-Path -Path $folder\"$($file.BaseName).txt" -PathType Leaf)) {
        New-Item -ItemType file -Path $folder -Name "$($file.BaseName).txt" -Value $caption_text
    }
}
```

You can also use the `Captioning` tool found under the `Utilities` tab in the GUI.
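For readers who would rather not use PowerShell, here is a minimal Python sketch of the same idea: write a caption file next to every image that does not already have one. The function name `write_missing_captions` is hypothetical and this is not a port of `tools\caption.ps1` shipped with the repo; it only mirrors the behaviour described above, using the same three image extensions.

```python
from pathlib import Path

# Same image extensions as the PowerShell sample above.
IMAGE_SUFFIXES = {".png", ".jpg", ".webp"}


def write_missing_captions(folder: str, caption_text: str) -> list[Path]:
    """Create '<image name>.txt' for each image that has no caption file yet.

    Returns the caption files that were created.
    """
    created = []
    for image in sorted(Path(folder).iterdir()):
        if not image.is_file() or image.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        caption_file = image.with_suffix(".txt")
        if not caption_file.exists():  # never overwrite an existing caption
            caption_file.write_text(caption_text, encoding="utf-8")
            created.append(caption_file)
    return created


if __name__ == "__main__":
    # "sample" is the placeholder folder name used in the PowerShell example.
    if Path("sample").is_dir():
        for path in write_missing_captions("sample", "cute dog"):
            print(f"created {path}")
```

Existing caption files are left untouched, so the function can be re-run safely after adding new images to the folder.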

|

||||

|

||||

## GUI

|

||||

|

||||

Support for GUI based training using gradio. You can start the GUI interface by running:

|

||||

|

||||

```powershell

|

||||

.\finetune.bat

|

||||

```

|

||||

|

||||

## CLI

|

||||

|

||||

You can find various examples of how to leverage the fine_tune.py in this folder: https://github.com/bmaltais/kohya_ss/tree/master/examples

|

||||

|

||||

## Support

|

||||

|

||||

Drop by the discord server for support: https://discord.com/channels/1041518562487058594/1041518563242020906

|

||||

|

||||

## Change history

|

||||

|

||||

* 12/20 (v9.6) update:

|

||||

- fix issue with config file save and opening

|

||||

* 12/19 (v9.5) update:

|

||||

- Fix file/folder dialog opening behind the browser window

|

||||

- Update GUI layout to be more logical

|

||||

* 12/18 (v9.4) update:

|

||||

- Add WD14 tagging to utilities

|

||||

* 12/18 (v9.3) update:

|

||||

- Add logging option

|

||||

* 12/18 (v9.2) update:

|

||||

- Add BLIP Captioning utility

|

||||

* 12/18 (v9.1) update:

|

||||

- Add Stable Diffusion model conversion utility. Make sure to run `pip install -U -r requirements.txt` after updating to this release, as it introduces new pip requirements.

|

||||

* 12/17 (v9) update:

|

||||

- Save model as option added to fine_tune.py

|

||||

- Save model as option added to GUI

|

||||

- Retirement of cli based documentation. Will focus attention to GUI based training

|

||||

* 12/13 (v8):

|

||||

- WD14Tagger now works on its own.

|

||||

- Added support for learning to fp16 up to the gradient. See "Building the environment and preparing scripts for Diffusers" for more info.

|

||||

* 12/10 (v7):

|

||||

- We have added support for Diffusers 0.10.2.

|

||||

- In addition, we have made other fixes.

|

||||

- For more information, please see the section on "Building the environment and preparing scripts for Diffusers" in our documentation.

|

||||

* 12/6 (v6): We have responded to reports that some models experience an error when saving in SafeTensors format.

|

||||

* 12/5 (v5):

|

||||

- .safetensors format is now supported. Install SafeTensors as "pip install safetensors". When loading, it is automatically determined by extension. Specify use_safetensors options when saving.

|

||||

- Added an option to add any string before the date and time log directory name log_prefix.

|

||||

- Cleaning scripts now work without either captions or tags.

|

||||

* 11/29 (v4):

|

||||

- Diffusers 0.9.0 is required. Update with "pip install -U diffusers[torch]==0.9.0" in the virtual environment, and update the dependent libraries with "pip install --upgrade -r requirements.txt" if other errors occur.

|

||||

- Compatible with Stable Diffusion v2.0. Add the --v2 option when training (and pre-fetching latents). If you are using 768-v-ema.ckpt or stable-diffusion-2 instead of stable-diffusion-v2-base, add --v_parameterization as well when learning. Learn more about other options.

|

||||

- The minimum resolution and maximum resolution of the bucket can be specified when pre-fetching latents.

|

||||

- Corrected the calculation formula for loss (fixed that it was increasing according to the batch size).

|

||||

- Added options related to the learning rate scheduler.

|

||||

- You can now download and train on Diffusers models directly from Hugging Face. In addition, Diffusers models can be saved during training.

|

||||

- clean_captions_and_tags.py can now be used even when only captions or only tags are present.

|

||||

- Other minor fixes such as changing the arguments of the noise scheduler during training.

|

||||

* 11/23 (v3):

|

||||

- Added WD14Tagger tagging script.

|

||||

- A log output function has been added to the fine_tune.py. Also, fixed the double shuffling of data.

|

||||

- Fixed misspelling of options for each script (caption_extention→caption_extension will work for the time being, even if it remains outdated).

|

||||

@@ -1,3 +0,0 @@

|

||||

# Diffusers Fine Tuning

|

||||

|

||||

Code has been moved to dedicated repo at: https://github.com/bmaltais/kohya_diffusers_fine_tuning

|

||||

1

dreambooth.bat

Normal file


@@ -0,0 +1 @@

|

||||

.\venv\Scripts\python.exe .\dreambooth_gui.py

|

||||

@@ -355,7 +355,7 @@ def train_model(
     lr_warmup_steps = round(float(int(lr_warmup) * int(max_train_steps) / 100))
     print(f'lr_warmup_steps = {lr_warmup_steps}')

-    run_cmd = f'accelerate launch --num_cpu_threads_per_process={num_cpu_threads_per_process} "train_db_fixed.py"'
+    run_cmd = f'accelerate launch --num_cpu_threads_per_process={num_cpu_threads_per_process} "train_db.py"'
     if v2:
         run_cmd += ' --v2'
     if v_parameterization:

@ -734,10 +734,10 @@ with interface:

|

||||

shuffle_caption = gr.Checkbox(

|

||||

label='Shuffle caption', value=False

|

||||

)

|

||||

save_state = gr.Checkbox(label='Save state', value=False)

|

||||

save_state = gr.Checkbox(label='Save training state', value=False)

|

||||

with gr.Row():

|

||||

resume = gr.Textbox(

|

||||

label='Resume',

|

||||

label='Resume from saved training state',

|

||||

placeholder='path to "last-state" state folder to resume from',

|

||||

)

|

||||

resume_button = gr.Button('📂', elem_id='open_folder_small')

|

||||

|

||||

@ -32,7 +32,7 @@ Write-Output "Repeats: $repeats"

|

||||

|

||||

.\venv\Scripts\activate

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed-ber.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--train_data_dir=$data_dir `

|

||||

--output_dir=$output_dir `

|

||||

@ -51,7 +51,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

|

||||

# 2nd pass at half the dataset repeat value

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$output_dir"\last.ckpt" `

|

||||

--train_data_dir=$data_dir `

|

||||

--output_dir=$output_dir"2" `

|

||||

@ -68,7 +68,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$([Math]::Ceiling($dataset_repeats/2)) `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed-ber.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$output_dir"\last.ckpt" `

|

||||

--train_data_dir=$data_dir `

|

||||

--output_dir=$output_dir"2" `

|

||||

|

||||

@ -48,7 +48,7 @@ $square_mts = [Math]::Ceiling($square_repeats / $train_batch_size * $epoch)

|

||||

|

||||

.\venv\Scripts\activate

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--train_data_dir=$landscape_data_dir `

|

||||

--output_dir=$landscape_output_dir `

|

||||

@ -65,7 +65,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$landscape_output_dir"\last.ckpt" `

|

||||

--train_data_dir=$portrait_data_dir `

|

||||

--output_dir=$portrait_output_dir `

|

||||

@ -82,7 +82,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$portrait_output_dir"\last.ckpt" `

|

||||

--train_data_dir=$square_data_dir `

|

||||

--output_dir=$square_output_dir `

|

||||

@ -101,7 +101,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

|

||||

# 2nd pass at half the dataset repeat value

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$square_output_dir"\last.ckpt" `

|

||||

--train_data_dir=$landscape_data_dir `

|

||||

--output_dir=$landscape_output_dir"2" `

|

||||

@ -118,7 +118,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$([Math]::Ceiling($dataset_repeats/2)) `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$landscape_output_dir"2\last.ckpt" `

|

||||

--train_data_dir=$portrait_data_dir `

|

||||

--output_dir=$portrait_output_dir"2" `

|

||||

@ -135,7 +135,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$([Math]::Ceiling($dataset_repeats/2)) `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$portrait_output_dir"2\last.ckpt" `

|

||||

--train_data_dir=$square_data_dir `

|

||||

--output_dir=$square_output_dir"2" `

|

||||

|

||||

@ -48,7 +48,7 @@ $square_mts = [Math]::Ceiling($square_repeats / $train_batch_size * $epoch)

|

||||

|

||||

.\venv\Scripts\activate

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--train_data_dir=$landscape_data_dir `

|

||||

--output_dir=$landscape_output_dir `

|

||||

@ -65,7 +65,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--save_half

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$landscape_output_dir"\last.ckpt" `

|

||||

--train_data_dir=$portrait_data_dir `

|

||||

--output_dir=$portrait_output_dir `

|

||||

@ -82,7 +82,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--save_half

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$portrait_output_dir"\last.ckpt" `

|

||||

--train_data_dir=$square_data_dir `

|

||||

--output_dir=$square_output_dir `

|

||||

@ -101,7 +101,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

|

||||

# 2nd pass at half the dataset repeat value

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$square_output_dir"\last.ckpt" `

|

||||

--train_data_dir=$landscape_data_dir `

|

||||

--output_dir=$landscape_output_dir"2" `

|

||||

@ -118,7 +118,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$([Math]::Ceiling($dataset_repeats/2)) `

|

||||

--save_half

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$landscape_output_dir"2\last.ckpt" `

|

||||

--train_data_dir=$portrait_data_dir `

|

||||

--output_dir=$portrait_output_dir"2" `

|

||||

@ -135,7 +135,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process tra

|

||||

--dataset_repeats=$([Math]::Ceiling($dataset_repeats/2)) `

|

||||

--save_half

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--pretrained_model_name_or_path=$portrait_output_dir"2\last.ckpt" `

|

||||

--train_data_dir=$square_data_dir `

|

||||

--output_dir=$square_output_dir"2" `

|

||||

|

||||

@ -1,69 +0,0 @@

|

||||

# This powershell script will create a model using the fine tuning dreambooth method. It will require landscape,

|

||||

# portrait and square images.

|

||||

#

|

||||

# Adjust the script to your own needs

|

||||

|

||||

# Sylvia Ritter

|

||||

# variable values

|

||||

$pretrained_model_name_or_path = "D:\models\v1-5-pruned-mse-vae.ckpt"

|

||||

$train_dir = "D:\dreambooth\train_bernard\v3"

|

||||

$folder_name = "dataset"

|

||||

|

||||

$learning_rate = 1e-6

|

||||

$dataset_repeats = 80

|

||||

$train_batch_size = 6

|

||||

$epoch = 1

|

||||

$save_every_n_epochs=1

|

||||

$mixed_precision="fp16"

|

||||

$num_cpu_threads_per_process=6

|

||||

|

||||

|

||||

# You should not have to change values past this point

|

||||

|

||||

$data_dir = $train_dir + "\" + $folder_name

|

||||

$output_dir = $train_dir + "\model"

|

||||

|

||||

# stop script on error

|

||||

$ErrorActionPreference = "Stop"

|

||||

|

||||

.\venv\Scripts\activate

|

||||

|

||||

$data_dir_buckets = $data_dir + "-buckets"

|

||||

|

||||

python .\diffusers_fine_tuning\create_buckets.py $data_dir $data_dir_buckets --max_resolution "768,512"

|

||||

|

||||

foreach($directory in Get-ChildItem -path $data_dir_buckets -Directory)

|

||||

|

||||

{

|

||||

if (Test-Path -Path $output_dir-$directory)

|

||||

{

|

||||

Write-Host "The folder $output_dir-$directory already exists, skipping bucket."

|

||||

}

|

||||

else

|

||||

{

|

||||

Write-Host $directory

|

||||

$dir_img_num = Get-ChildItem "$data_dir_buckets\$directory" -Recurse -File -Include *.jpg | Measure-Object | %{$_.Count}

|

||||

$repeats = $dir_img_num * $dataset_repeats

|

||||

$mts = [Math]::Ceiling($repeats / $train_batch_size * $epoch)

|

||||

|

||||

Write-Host

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed-ber.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--train_data_dir=$data_dir_buckets\$directory `

|

||||

--output_dir=$output_dir-$directory `

|

||||

--resolution=$directory `

|

||||

--train_batch_size=$train_batch_size `

|

||||

--learning_rate=$learning_rate `

|

||||

--max_train_steps=$mts `

|

||||

--use_8bit_adam `

|

||||

--xformers `

|

||||

--mixed_precision=$mixed_precision `

|

||||

--save_every_n_epochs=$save_every_n_epochs `

|

||||

--fine_tuning `

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--save_precision="fp16"

|

||||

}

|

||||

|

||||

$pretrained_model_name_or_path = "$output_dir-$directory\last.ckpt"

|

||||

}

|

||||

@ -1,72 +0,0 @@

|

||||

# Sylvia Ritter. AKA: by silvery trait

|

||||

|

||||

# variable values

|

||||

$pretrained_model_name_or_path = "D:\models\v1-5-pruned-mse-vae.ckpt"

|

||||

$train_dir = "D:\dreambooth\train_sylvia_ritter\raw_data"

|

||||

$training_folder = "all-images-v3"

|

||||

|

||||

$learning_rate = 5e-6

|

||||

$dataset_repeats = 40

|

||||

$train_batch_size = 6

|

||||

$epoch = 4

|

||||

$save_every_n_epochs=1

|

||||

$mixed_precision="bf16"

|

||||

$num_cpu_threads_per_process=6

|

||||

|

||||

$max_resolution = "768,576"

|

||||

|

||||

# You should not have to change values past this point

|

||||

|

||||

# stop script on error

|

||||

$ErrorActionPreference = "Stop"

|

||||

|

||||

# activate venv

|

||||

.\venv\Scripts\activate

|

||||

|

||||

# create caption json file

|

||||

python D:\kohya_ss\diffusers_fine_tuning\merge_captions_to_metadata.py `

|

||||

--caption_extention ".txt" $train_dir"\"$training_folder $train_dir"\meta_cap.json"

|

||||

|

||||

# create images buckets

|

||||

python D:\kohya_ss\diffusers_fine_tuning\prepare_buckets_latents.py `

|

||||

$train_dir"\"$training_folder `

|

||||

$train_dir"\meta_cap.json" `

|

||||

$train_dir"\meta_lat.json" `

|

||||

$pretrained_model_name_or_path `

|

||||

--batch_size 4 --max_resolution $max_resolution --mixed_precision fp16

|

||||

|

||||

# Get number of valid images

|

||||

$image_num = Get-ChildItem "$train_dir\$training_folder" -Recurse -File -Include *.npz | Measure-Object | %{$_.Count}

|

||||

$repeats = $image_num * $dataset_repeats

|

||||

|

||||

# calculate max_train_set

|

||||

$max_train_set = [Math]::Ceiling($repeats / $train_batch_size * $epoch)

|

||||

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\diffusers_fine_tuning\fine_tune.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--in_json $train_dir"\meta_lat.json" `

|

||||

--train_data_dir=$train_dir"\"$training_folder `

|

||||

--output_dir=$train_dir"\fine_tuned" `

|

||||

--train_batch_size=$train_batch_size `

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--learning_rate=$learning_rate `

|

||||

--max_train_steps=$max_train_set `

|

||||

--use_8bit_adam --xformers `

|

||||

--mixed_precision=$mixed_precision `

|

||||

--save_every_n_epochs=$save_every_n_epochs `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\diffusers_fine_tuning\fine_tune.py `

|

||||

--pretrained_model_name_or_path=$train_dir"\fine_tuned\last.ckpt" `

|

||||

--in_json $train_dir"\meta_lat.json" `

|

||||

--train_data_dir=$train_dir"\"$training_folder `

|

||||

--output_dir=$train_dir"\fine_tuned2" `

|

||||

--train_batch_size=$train_batch_size `

|

||||

--dataset_repeats=$([Math]::Ceiling($dataset_repeats / 2)) `

|

||||

--learning_rate=$learning_rate `

|

||||

--max_train_steps=$([Math]::Ceiling($max_train_set / 2)) `

|

||||

--use_8bit_adam --xformers `

|

||||

--mixed_precision=$mixed_precision `

|

||||

--save_every_n_epochs=$save_every_n_epochs `

|

||||

--save_precision="fp16"

|

||||

153

examples/kohya_finetune.ps1

Normal file


@ -0,0 +1,153 @@

|

||||

# variables related to the pretrained model

|

||||

$pretrained_model_name_or_path = "D:\models\test\samdoesart2\model\last"

|

||||

$v2 = 1 # set to 1 for true or 0 for false

|

||||

$v_model = 0 # set to 1 for true or 0 for false

|

||||

|

||||

# variables related to the training dataset and output directory

|

||||

$train_dir = "D:\models\test\samdoesart2"

|

||||

$image_folder = "D:\dataset\samdoesart2\raw"

|

||||

$output_dir = "D:\models\test\samdoesart2\model_e2\"

|

||||

$max_resolution = "512,512"

|

||||

|

||||

# variables related to the training process

|

||||

$learning_rate = 1e-6

|

||||

$lr_scheduler = "constant" # Default is constant

|

||||

$lr_warmup = 0 # % of steps to warmup for 0 - 100. Default is 0.

|

||||

$dataset_repeats = 40

|

||||

$train_batch_size = 8

|

||||

$epoch = 1

|

||||

$save_every_n_epochs = 1

|

||||

$mixed_precision = "bf16"

|

||||

$save_precision = "fp16" # use fp16 for better compatibility with auto1111 and other repos

|

||||

$seed = "494481440"

|

||||

$num_cpu_threads_per_process = 6

|

||||

$train_text_encoder = 0 # set to 1 to train text encoder otherwise set to 0

|

||||

|

||||

# variables related to the resulting diffuser model. If input is ckpt or tensors then it is not applicable

|

||||

$convert_to_safetensors = 1 # set to 1 to convert resulting diffuser to safetensors

|

||||

$convert_to_ckpt = 1 # set to 1 to convert resulting diffuser to ckpt

|

||||

|

||||

# other variables

|

||||

$kohya_finetune_repo_path = "D:\kohya_ss"

|

||||

|

||||

### You should not need to change things below

|

||||

|

||||

# Set variables to useful values using the ternary operator (requires PowerShell 7 or later)

|

||||

$v_model = ($v_model -eq 0) ? $null : "--v_parameterization"

|

||||

$v2 = ($v2 -eq 0) ? $null : "--v2"

|

||||

$train_text_encoder = ($train_text_encoder -eq 0) ? $null : "--train_text_encoder"

|

||||

|

||||

# stop script on error

|

||||

$ErrorActionPreference = "Stop"

|

||||

|

||||

# define a list of substrings to search for

|

||||

$substrings_v2 = "stable-diffusion-2-1-base", "stable-diffusion-2-base"

|

||||

|

||||

# check if $v2 and $v_model are empty and if $pretrained_model_name_or_path contains any of the substrings in the v2 list

|

||||

if ($v2 -eq $null -and $v_model -eq $null -and ($substrings_v2 | Where-Object { $pretrained_model_name_or_path -match $_ }).Count -gt 0) {

|

||||

Write-Host("SD v2 model detected. Setting --v2 parameter")

|

||||

$v2 = "--v2"

|

||||

$v_model = $null

|

||||

}

|

||||

|

||||

# define a list of substrings to search for v-objective

|

||||

$substrings_v_model = "stable-diffusion-2-1", "stable-diffusion-2"

|

||||

|

||||

# check if $v2 and $v_model are empty and if $pretrained_model_name_or_path contains any of the substrings in the v_model list

|

||||

if ($v2 -eq $null -and $v_model -eq $null -and ($substrings_v_model | Where-Object { $pretrained_model_name_or_path -match $_ }).Count -gt 0) {

|

||||

Write-Host("SD v2 v_model detected. Setting --v2 parameter and --v_parameterization")

|

||||

$v2 = "--v2"

|

||||

$v_model = "--v_parameterization"

|

||||

}

|

||||

|

||||

# activate venv

|

||||

cd $kohya_finetune_repo_path

|

||||

.\venv\Scripts\activate

|

||||

|

||||

# create caption json file

|

||||

if (!(Test-Path -Path $train_dir)) {

|

||||

New-Item -Path $train_dir -ItemType "directory"

|

||||

}

|

||||

|

||||

python $kohya_finetune_repo_path\script\merge_captions_to_metadata.py `

|

||||

--caption_extention ".txt" $image_folder $train_dir"\meta_cap.json"

|

||||

|

||||

# create images buckets

|

||||

python $kohya_finetune_repo_path\script\prepare_buckets_latents.py `

|

||||

$image_folder `

|

||||

$train_dir"\meta_cap.json" `

|

||||

$train_dir"\meta_lat.json" `

|

||||

$pretrained_model_name_or_path `

|

||||

--batch_size 4 --max_resolution $max_resolution --mixed_precision $mixed_precision

|

||||

|

||||

# Get number of valid images

|

||||

$image_num = Get-ChildItem "$image_folder" -Recurse -File -Include *.npz | Measure-Object | % { $_.Count }

|

||||

|

||||

$repeats = $image_num * $dataset_repeats

|

||||

Write-Host("Repeats = $repeats")

|

||||

|

||||

# calculate max_train_set

|

||||

$max_train_set = [Math]::Ceiling($repeats / $train_batch_size * $epoch)

|

||||

Write-Host("max_train_set = $max_train_set")

|

||||

|

||||

$lr_warmup_steps = [Math]::Round($lr_warmup * $max_train_set / 100)

|

||||

Write-Host("lr_warmup_steps = $lr_warmup_steps")

|

||||

|

||||

Write-Host("$v2 $v_model")

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process $kohya_finetune_repo_path\script\fine_tune.py `

|

||||

$v2 `

|

||||

$v_model `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--in_json $train_dir\meta_lat.json `

|

||||

--train_data_dir="$image_folder" `

|

||||

--output_dir=$output_dir `

|

||||

--train_batch_size=$train_batch_size `

|

||||

--dataset_repeats=$dataset_repeats `

|

||||

--learning_rate=$learning_rate `

|

||||

--lr_scheduler=$lr_scheduler `

|

||||

--lr_warmup_steps=$lr_warmup_steps `

|

||||

--max_train_steps=$max_train_set `

|

||||

--use_8bit_adam `

|

||||

--xformers `

|

||||

--mixed_precision=$mixed_precision `

|

||||

--save_every_n_epochs=$save_every_n_epochs `

|

||||

--seed=$seed `

|

||||

$train_text_encoder `

|

||||

--save_precision=$save_precision

|

||||

|

||||

# check if $output_dir\last is a directory... therefore it is a diffuser model

|

||||

if (Test-Path "$output_dir\last" -PathType Container) {

|

||||

if ($convert_to_ckpt) {

|

||||

Write-Host("Converting diffuser model $output_dir\last to $output_dir\last.ckpt")

|

||||

python "$kohya_finetune_repo_path\tools\convert_diffusers20_original_sd.py" `

|

||||

$output_dir\last `

|

||||

$output_dir\last.ckpt `

|

||||

--$save_precision

|

||||

}

|

||||

if ($convert_to_safetensors) {

|

||||

Write-Host("Converting diffuser model $output_dir\last to $output_dir\last.safetensors")

|

||||

python "$kohya_finetune_repo_path\tools\convert_diffusers20_original_sd.py" `

|

||||

$output_dir\last `

|

||||

$output_dir\last.safetensors `

|

||||

--$save_precision

|

||||

}

|

||||

}

|

||||

|

||||

# define a list of substrings to search for inference file

|

||||

$substrings_sd_model = ".ckpt", ".safetensors"

|

||||

$matching_extension = foreach ($ext in $substrings_sd_model) {

|

||||

Get-ChildItem $output_dir -File | Where-Object { $_.Extension -contains $ext }

|

||||

}

|

||||

|

||||

if ($matching_extension.Count -gt 0) {

|

||||

# copy the matching inference config ("v2-inference-v.yaml" or "v2-inference.yaml") from the "v2_inference" folder to $output_dir as last.yaml

|

||||

if ( $v2 -ne $null -and $v_model -ne $null) {

|

||||

Write-Host("Saving v2-inference-v.yaml as $output_dir\last.yaml")

|

||||

Copy-Item -Path "$kohya_finetune_repo_path\v2_inference\v2-inference-v.yaml" -Destination "$output_dir\last.yaml"

|

||||

}

|

||||

elseif ( $v2 -ne $null ) {

|

||||

Write-Host("Saving v2-inference.yaml as $output_dir\last.yaml")

|

||||

Copy-Item -Path "$kohya_finetune_repo_path\v2_inference\v2-inference.yaml" -Destination "$output_dir\last.yaml"

|

||||

}

|

||||

}
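The step arithmetic in the script above (`$repeats`, `$max_train_set`, `$lr_warmup_steps`) can be sketched in Python as follows. Only the formulas come from the script; the function name `compute_steps`, the image count, and the 10% warmup value are illustrative assumptions.

```python
import math


def compute_steps(image_num, dataset_repeats, train_batch_size, epoch, lr_warmup):
    """Mirror the script's arithmetic; lr_warmup is a percentage (0-100)."""
    repeats = image_num * dataset_repeats
    # Total optimizer steps, rounded up so a partial final batch still counts.
    max_train_steps = math.ceil(repeats / train_batch_size * epoch)
    # Warmup is expressed as a share of the total steps.
    lr_warmup_steps = round(lr_warmup * max_train_steps / 100)
    return repeats, max_train_steps, lr_warmup_steps


# Hypothetical dataset of 30 cached latents (.npz files); repeats, batch size
# and epoch match the script defaults, lr_warmup is set to 10 to show an effect.
repeats, max_train_steps, lr_warmup_steps = compute_steps(30, 40, 8, 1, 10)
print(repeats, max_train_steps, lr_warmup_steps)
```

Both `[Math]::Round` in PowerShell and Python's built-in `round` resolve exact halves to the nearest even integer by default, so the two versions agree on ties.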

|

||||

@ -24,11 +24,11 @@ $ErrorActionPreference = "Stop"

|

||||

.\venv\Scripts\activate

|

||||

|

||||

# create caption json file

|

||||

python D:\kohya_ss\diffusers_fine_tuning\merge_captions_to_metadata.py `

|

||||

python D:\kohya_ss\finetune\merge_captions_to_metadata.py `

|

||||

--caption_extention ".txt" $train_dir"\"$training_folder $train_dir"\meta_cap.json"

|

||||

|

||||

# create images buckets

|

||||

python D:\kohya_ss\diffusers_fine_tuning\prepare_buckets_latents.py `

|

||||

python D:\kohya_ss\finetune\prepare_buckets_latents.py `

|

||||

$train_dir"\"$training_folder `

|

||||

$train_dir"\meta_cap.json" `

|

||||

$train_dir"\meta_lat.json" `

|

||||

@ -43,7 +43,7 @@ $repeats = $image_num * $dataset_repeats

|

||||

$max_train_set = [Math]::Ceiling($repeats / $train_batch_size * $epoch)

|

||||

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\diffusers_fine_tuning\fine_tune.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\finetune\fine_tune.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--in_json $train_dir"\meta_lat.json" `

|

||||

--train_data_dir=$train_dir"\"$training_folder `

|

||||

@ -58,7 +58,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\

|

||||

--train_text_encoder `

|

||||

--save_precision="fp16"

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\diffusers_fine_tuning\fine_tune_v1-ber.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\finetune\fine_tune.py `

|

||||

--pretrained_model_name_or_path=$train_dir"\fine_tuned\last.ckpt" `

|

||||

--in_json $train_dir"\meta_lat.json" `

|

||||

--train_data_dir=$train_dir"\"$training_folder `

|

||||

@ -74,7 +74,7 @@ accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\

|

||||

|

||||

# Hypernetwork

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\diffusers_fine_tuning\fine_tune_v1-ber.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process D:\kohya_ss\finetune\fine_tune.py `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--in_json $train_dir"\meta_lat.json" `

|

||||

--train_data_dir=$train_dir"\"$training_folder `

|

||||

|

||||

@ -8,7 +8,7 @@ $pretrained_model_name_or_path = "D:\models\512-base-ema.ckpt"

|

||||

$data_dir = "D:\models\dariusz_zawadzki\kohya_reg\data"

|

||||

$reg_data_dir = "D:\models\dariusz_zawadzki\kohya_reg\reg"

|

||||

$logging_dir = "D:\models\dariusz_zawadzki\logs"

|

||||

$output_dir = "D:\models\dariusz_zawadzki\train_db_fixed_model_reg_v2"

|

||||

$output_dir = "D:\models\dariusz_zawadzki\train_db_model_reg_v2"

|

||||

$resolution = "512,512"

|

||||

$lr_scheduler="polynomial"

|

||||

$cache_latents = 1 # 1 = true, 0 = false

|

||||

@ -41,7 +41,7 @@ Write-Output "Repeats: $repeats"

|

||||

cd D:\kohya_ss

|

||||

.\venv\Scripts\activate

|

||||

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db_fixed.py `

|

||||

accelerate launch --num_cpu_threads_per_process $num_cpu_threads_per_process train_db.py `

|

||||

--v2 `

|

||||

--pretrained_model_name_or_path=$pretrained_model_name_or_path `

|

||||

--train_data_dir=$data_dir `

|

||||

|

||||

@ -1,6 +0,0 @@

|

||||

$date = Read-Host "Enter the date (yyyy-mm-dd):" -Prompt "Invalid date format. Please try again (yyyy-mm-dd):" -ValidateScript {

|

||||

# Parse the date input and return $true if it is in the correct format,

|

||||

# or $false if it is not

|

||||

$date = [DateTime]::Parse($_)

|

||||

return $date -ne $null

|

||||

}

|

||||

1059

fine_tune.py

Normal file


File diff suppressed because it is too large


1

finetune.bat

Normal file


@ -0,0 +1 @@

|

||||

.\venv\Scripts\python.exe .\finetune_gui.py

|

||||

@ -8,8 +8,10 @@

|

||||

import warnings

|

||||

warnings.filterwarnings("ignore")

|

||||

|

||||

from models.vit import VisionTransformer, interpolate_pos_embed

|

||||

from models.med import BertConfig, BertModel, BertLMHeadModel

|

||||

# from models.vit import VisionTransformer, interpolate_pos_embed

|

||||

# from models.med import BertConfig, BertModel, BertLMHeadModel

|

||||

from blip.vit import VisionTransformer, interpolate_pos_embed

|

||||

from blip.med import BertConfig, BertModel, BertLMHeadModel

|

||||

from transformers import BertTokenizer

|

||||

|

||||

import torch

|

||||

@ -929,7 +929,7 @@ class BertLMHeadModel(BertPreTrainedModel):

|

||||

cross_attentions=outputs.cross_attentions,

|

||||

)

|

||||

|

||||

def prepare_inputs_for_generation(self, input_ids, past=None, attention_mask=None, encoder_hidden_states=None, encoder_attention_mask=None, **model_kwargs):

|

||||

def prepare_inputs_for_generation(self, input_ids, past=None, attention_mask=None, **model_kwargs):

|

||||

input_shape = input_ids.shape

|

||||

# if model is used as a decoder in encoder-decoder model, the decoder attention mask is created on the fly

|

||||

if attention_mask is None:

|

||||

@ -943,8 +943,8 @@ class BertLMHeadModel(BertPreTrainedModel):

|

||||

"input_ids": input_ids,

|

||||

"attention_mask": attention_mask,

|

||||

"past_key_values": past,

|

||||

"encoder_hidden_states": encoder_hidden_states,

|

||||

"encoder_attention_mask": encoder_attention_mask,

|

||||

"encoder_hidden_states": model_kwargs.get("encoder_hidden_states", None),

|

||||

"encoder_attention_mask": model_kwargs.get("encoder_attention_mask", None),

|

||||

"is_decoder": True,

|

||||

}
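The change to `prepare_inputs_for_generation` above reads the encoder tensors from `model_kwargs` instead of taking them as named parameters. The pattern can be sketched without any model code as below; `prepare_inputs` is a simplified, hypothetical stand-in that omits the attention-mask creation and past-key handling of the real method, and the sample values are placeholders rather than tensors.

```python
def prepare_inputs(input_ids, past=None, attention_mask=None, **model_kwargs):
    # Optional encoder inputs are looked up by key: missing keys yield None,
    # and unrelated extra keyword arguments are simply ignored.
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "past_key_values": past,
        "encoder_hidden_states": model_kwargs.get("encoder_hidden_states", None),
        "encoder_attention_mask": model_kwargs.get("encoder_attention_mask", None),
        "is_decoder": True,
    }


# Placeholder values stand in for real tensors.
with_encoder = prepare_inputs([101, 102], encoder_hidden_states="enc", unrelated_flag=True)
without_encoder = prepare_inputs([101, 102])
```

Reading from `**model_kwargs` lets the caller forward whatever keyword arguments it collects without the method having to name each one in its signature.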

|

||||

|

||||

22

finetune/blip/med_config.json

Normal file


@ -0,0 +1,22 @@

|

||||

{

|

||||

"architectures": [

|

||||

"BertModel"

|

||||

],